Mar 10, 2016

The ongoing five-game series, played in Seoul and livestreamed nightly on YouTube, is a surprisingly high-profile contest for a computer system that wasn’t expected to be possible for another 10 years. Sedol and the founders of DeepMind were front-page news in South Korea on game day. More than 90,000 people worldwide watched the livestream of the first match on March 9, and at least that many saw AlphaGo win.

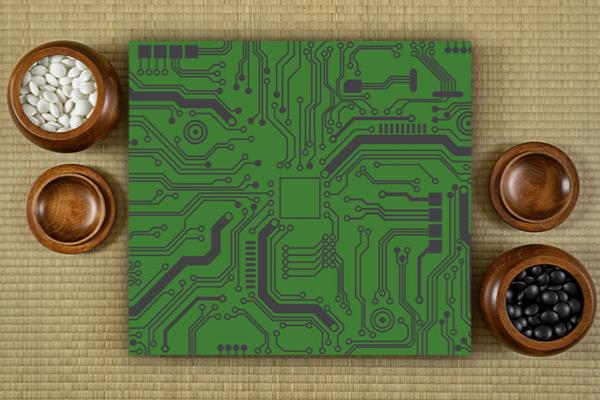

If it goes on to win the whole series, AlphaGo will do what many have long considered impossible: beat humans at their own best game.
