“Why do you look at the speck of sawdust in your brother's eye and pay no attention to the plank in your own eye?” —Matthew 7:3

“The first principle is that you must not fool yourself, and you are the easiest person to fool.” —Richard Feynman, physicist

Election years are tough on science and numbers. Everywhere you go, yet another politician or one of their supporters is citing numbers, throwing them out there, claiming their support, and reminding everyone that if we just followed the evidence we’d all end up agreeing with them. Their opponents, of course, claim the same thing. This is bad enough in formal debates, but when you wade into Facebook or Twitter, it gets downright overwhelming. Everyone has “science on their side” or “the numbers back them up.”

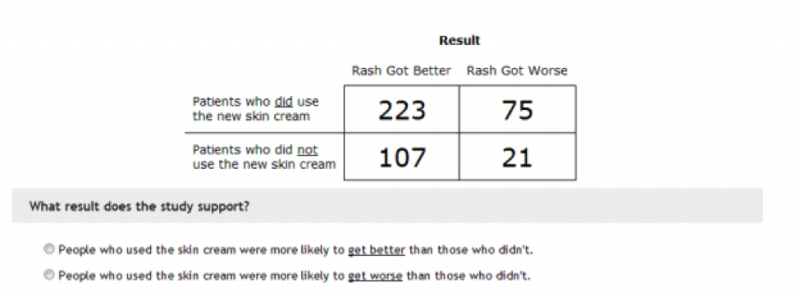

Have you ever wondered why this happens? How two people can look at objective numbers and see entirely different things? Why your friends with differing viewpoints are never swayed by the studies or numbers you provide, and why every time they share their evidence you walk away unconvinced? Well, in the 2013 working paper "Motivated Numeracy and Enlightened Self-Government," some researchers gave us some insight. They gave subjects a math quiz that contained a problem about patients with a rash using a new skin cream, and asked them to work out whether the cream worked. They provided this data:

This is a classic math skills problem because you have to really think through what question you're trying to answer before you do your calculations.

More people got better in the "use the skin cream" group, but more people also got worse. The key is to look at the ratio between the “got better” and “got worse” groups and NOT the absolute numbers. In the “use the skin cream” group, you are about 3 times as likely to get better as to get worse. In the “did not use the skin cream” group, you are about 5 times as likely to get better, which makes skipping the cream the better option.
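If it helps to see the arithmetic spelled out, here is a minimal sketch in Python. One loud caveat: the counts below (223 and 75 for the skin cream group, 107 and 21 for the no-cream group) are the figures commonly reported for this problem, not numbers stated in the text above, so treat them as an assumption. The function and variable names are made up for illustration.

```python
# ASSUMPTION: these counts are the figures commonly reported for the
# skin cream problem; they are not taken from the text of this article.
cream = {"better": 223, "worse": 75}
no_cream = {"better": 107, "worse": 21}


def better_to_worse_ratio(group):
    """Number of patients who improved for each patient who got worse."""
    return group["better"] / group["worse"]


def share_better(group):
    """Fraction of the whole group whose rash improved."""
    return group["better"] / (group["better"] + group["worse"])


cream_ratio = better_to_worse_ratio(cream)        # roughly 3 to 1
no_cream_ratio = better_to_worse_ratio(no_cream)  # roughly 5 to 1

# The tempting (wrong) comparison: raw counts of people who got better.
more_improved_with_cream = cream["better"] > no_cream["better"]

# The comparison that answers the question: which group had better odds?
cream_looks_effective = cream_ratio > no_cream_ratio

print(f"Used cream:       {cream_ratio:.2f} better per worse, "
      f"{share_better(cream):.0%} improved")
print(f"Did not use cream: {no_cream_ratio:.2f} better per worse, "
      f"{share_better(no_cream):.0%} improved")
print("More people improved with cream (raw count)?", more_improved_with_cream)
print("Cream group had the better odds?", cream_looks_effective)
```

The raw count points one way and the ratio points the other, which is exactly the trap the quiz sets. Comparing the share of each group that improved leads to the same conclusion as comparing the ratios.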

If you had trouble with this, don’t feel bad. At baseline, only about 40 percent of people in the study got this right.

However, that wasn’t the only test the researchers gave out.

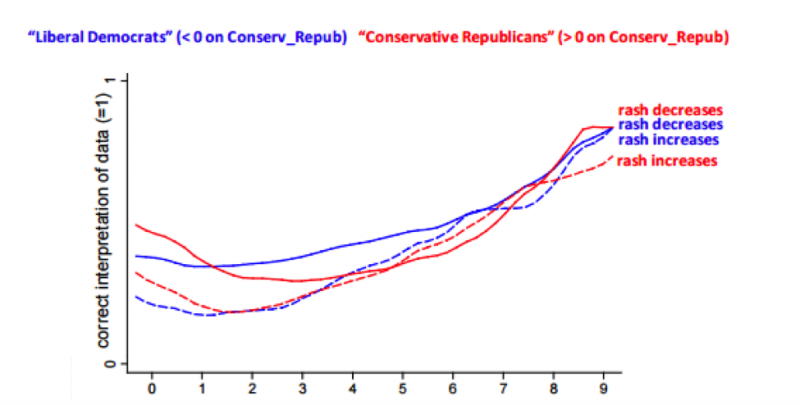

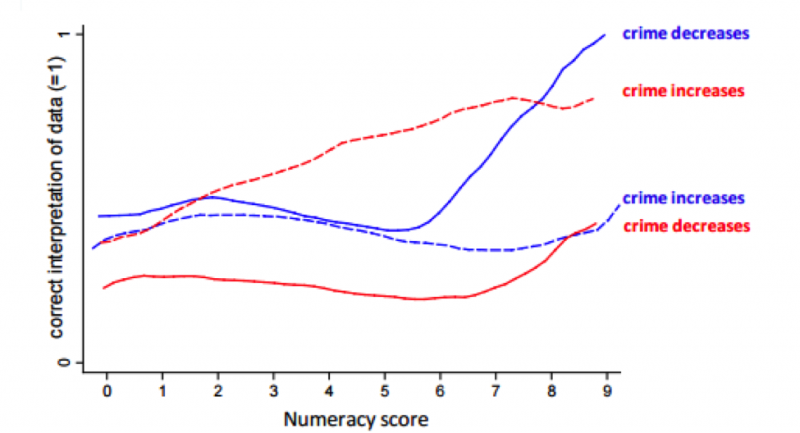

For some participants, the researchers took the original problem, kept the numbers the same, but changed "patients" to "cities," "skin cream" to "strict gun control laws," and "rash" to "crime."

Their goal was to turn a neutral and boring question into a highly charged question that people might have strong opinions about. They also flipped the outcome around for both problems, so participants had one of four possible questions with four different answers.

In one the skin cream worked to cure a rash, and in one it made it worse; in one strict gun control worked to lower crime, and in one it made it worse. The numbers in the matrix remained the same, but the words around them flipped. They also asked people their political party and a bunch of other math questions to get a sense of their overall mathematical ability.

Here's how people did when they were assessing rashes and skin cream:

It’s pretty much what we'd expect. Regardless of political party, and regardless of the outcome of the question, people with better math skills did better. Now check out what happens when people were shown the same numbers but believed they were working out a problem about the effectiveness of gun control legislation:

Look at the end of that graph there, where we see the people with a high mathematical ability. If using their brainpower got them an answer that they liked politically, they did it. However, when the answer didn't fit what they liked politically, they were no better than those with very little skill at getting the right answer. Your intellectual capacity does NOT make you less likely to make an error — it simply makes you more likely to be a hypocrite about your errors. Yikes.

If you think about it, this sort of thing is most common in debates where strong ethical or moral stances intersect with science or statistics. You'll frequently see people discussing various issues, then letting out a sigh and saying, "I don't know why other people won't just do their research!" The problem is that if you believe something strongly already, you're quite likely to think any research that agrees with you is more compelling than it actually is. On the other hand, research that disagrees with you will look less compelling than it may be.

This isn't just a problem for the hoi polloi either. Researchers who disagree frequently end up accusing each other of being motivated by ideology rather than science. We all do it; we just see it more clearly in those we disagree with. It’s called motivated reasoning, and it’s been pretty well studied for general political judgments. What is interesting about this study is that it showed motivated reasoning is powerful enough to erode concrete analytic skills we actually have under normal circumstances.

So why do we do this? In most cases it's pretty simple: We like to think that all of our beliefs are perfectly well reasoned and that all the facts back us up. When something challenges that assumption, we get defensive and stop thinking clearly. There's also some evidence that the Internet may be making this worse by giving us access to other people who will support our beliefs and stop us from reconsidering our stances when challenged. In fact, researchers have found that the stronger your stance toward something, the more likely you are to hold simplistic beliefs about it — beliefs like, "there are only two types of people, those who agree with me and those who don't."

Now to be clear: I am not advising anyone to give up a moral stance because science or numbers might disagree. If I could prove tomorrow that feeding the poor shortened your life expectancy by 20 years, I would not think we’d be morally justified in letting people starve to death. However, when you take all of this together, you can see that science and the Bible agree: Our tendency to deceive ourselves is one of our great weaknesses. Not all evidence is going to support you all the time. Understanding your biases and proceeding with humility when you see evidence that conflicts with one of your pre-existing beliefs is critical, both during the election season and any time you sign in to Facebook.
